|

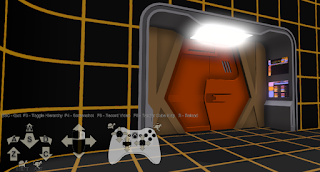

| Mike Mara using a G3D::VRApp game with physics |

Virtual reality research isn't just about rendering algorithms and tracking devices. Software engineering for new kinds of input and output is always an important challenge.

This article describes the upcoming API and scene graph design for the G3D Innovation Engine's experimental VR library, focusing on camera design.

The new design is a refinement of the original one that I published about a year ago. It is similar to how Unity's VR support works and is based on many discussions with their team and other game developers. My collaborators, my students, and I use this API regularly with HTC Vive, Oculus Rift, and Oculus DK2 VR systems.

The changes that we accumulated in the last year are based on experience creating many VR experiments and demos.

While the G3D implementation is available to everyone and this article documents it, my primary goal is to explain the design process for VR developers facing similar challenges in other engines.

Traditional Graphics Cameras

The camera entity in most non-VR first-person games is bolted to the player character at head or chest height. The character moves according to simulation rules based on the player's gamepad or keyboard input, and the camera simply moves with the character. Insofar as there is a "scene graph", it is simply that the player character is in world space (or temporarily parented to a vehicle or moving platform) and the camera is parented to the character.

VR Camera Challenges

Head Tracking (Real Movement)

Room-scale VR schematic from the HTC Vive manual

In VR, the player's real head movement, reported by the tracking system, moves the camera directly, independent of any simulated character. What should the VR system do when real-world movement places the camera inside a solid virtual object? How should the rendered player avatar respond to real-world movement so that it is not decapitated by extreme real head movement?

Virtual Movement

In-game movement may be effected by flying the virtual camera or by moving a character avatar. That movement may come from teleportation, gestural input driving a physics simulation, spline-based motion tracks, or controller-based input.

Default G3D virtual movement controls

Although no current commercial VR system tracks the player's real-world body, the virtual body is frequently used for collision detection against the world to prevent players from breaking gameplay by stepping through virtual walls.

Does virtual movement move the head? The tracked volume? The virtual body? How do we maintain the parenting relationships between these?

Generality Constraints

In the rare case where you are building a single application for a dedicated demonstration installation, you can dictate the movement mechanics and VR platform for the experience: for example, teleportation and standing VR.

However, an application that must work with multiple VR platforms (currently the only economically viable strategy) requires a flexible design that can satisfy multiple movement mechanics and VR platforms with a single scene graph. For a game engine, the VR design should ideally work even with scenes originally authored without VR support, by upgrading the scene graph to support a tracked head and somehow adapting the screen-based rendering to stereo VR rendering.

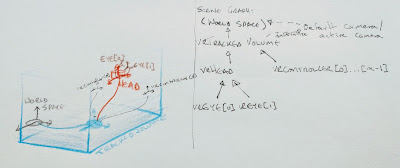

A Scene Graph

Below is our current VR scene graph design, which works with legacy desktop scenes. After a scene loads, G3D::VRApp automatically injects several dynamically constructed objects into the scene graph:

- vrTrackedVolume is an (invisible) G3D::MarkerEntity with bounds matching the tracked volume as reported by the SteamVR/OculusVR driver. It is initialized to the scene's default camera position in world space, then dropped vertically to the nearest surface below by a ray cast, with all roll and pitch removed.

- vrHead is a G3D::MarkerEntity that is a simulation child of vrTrackedVolume. That is, vrHead is in the object space of vrTrackedVolume. Its position in that object space is updated each frame from the VR driver's real-time tracking data.

- vrEye[0] and vrEye[1] are G3D::Cameras that are simulation children of vrHead using offsets queried from the VR driver. They have projection matrices defined by the VR driver and all other parameters copied from the scene's default camera.

- vrController[0...n - 1] are G3D::MarkerEntitys representing the tracked non-head objects, which are the game controllers/"wands" under current VR systems. These are simulation children of vrTrackedVolume.

- debugCamera is a G3D::Camera which is always inserted by any G3D::GApp. G3D::VRApp retains this behavior.
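To make the parenting concrete, here is a minimal C++ sketch of how a point in eye space reaches world space through vrHead and vrTrackedVolume, and of how roll and pitch can be stripped from the default camera when the tracked volume is injected. The Vec3 and Frame types and all helper functions are hypothetical stand-ins, not G3D's classes (G3D has its own vector and coordinate-frame types). The sketch assumes cameras look down -z and takes the ray-cast surface height as an input rather than querying a scene.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types for illustration; G3D's own classes differ.
struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }

// Rigid frame: row-major rotation matrix r plus translation t.
// toParent maps a point from this frame's object space into its parent's space.
struct Frame {
    double r[3][3];
    Vec3 t;

    Vec3 toParent(Vec3 p) const {
        Vec3 rotated{r[0][0] * p.x + r[0][1] * p.y + r[0][2] * p.z,
                     r[1][0] * p.x + r[1][1] * p.y + r[1][2] * p.z,
                     r[2][0] * p.x + r[2][1] * p.y + r[2][2] * p.z};
        return rotated + t;
    }
};

Frame identityAt(Vec3 t) { return {{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}, t}; }

// vrTrackedVolume initialization: keep only the camera's heading (yaw about +y),
// discarding roll and pitch, and drop the origin to surfaceY, which stands in for
// the height returned by a downward ray cast. Assumes cameras look down -z.
Frame trackedVolumeFrom(Vec3 cameraPos, Vec3 cameraForward, double surfaceY) {
    // The heading is undefined when looking straight up or down.
    assert(std::abs(cameraForward.x) + std::abs(cameraForward.z) > 0.0);
    const double yaw = std::atan2(-cameraForward.x, -cameraForward.z);
    const double c = std::cos(yaw), s = std::sin(yaw);
    // Rotation about +y that maps local -z onto the horizontal forward direction.
    return {{{c, 0, s}, {0, 1, 0}, {-s, 0, c}}, {cameraPos.x, surfaceY, cameraPos.z}};
}

// vrEye world position: eye offset (vrHead space) -> vrHead (vrTrackedVolume space) -> world.
Vec3 eyeWorldPosition(const Frame& trackedVolume, const Frame& headInVolume, Vec3 eyeOffset) {
    return trackedVolume.toParent(headInVolume.toParent(eyeOffset));
}
```

Because every eye position is computed through vrTrackedVolume, anything that moves the tracked volume (virtual movement, teleportation) carries the head and eyes with it, while tracking data only ever changes the vrHead frame.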

Motion

The default camera manipulator in G3D::GApp is a G3D::FirstPersonManipulator that interprets gamepad or mouse + keyboard input as moving the debugCamera when it is set as the G3D::GApp::activeCamera. G3D::VRApp retains this behavior, but also moves the vrTrackedVolume along with the defaultCamera when it is active.

Prototype new controllers for HTC Vive

The vrHead's world space position changes due to virtual movement because it is a child of the vrTrackedVolume. If you want to test for collisions, you have to do so based on a body inferred from the vrHead or the vrHead itself, not from the vrTrackedVolume that is creating the motion.

Pressing the "B" button on a gamepad, the middle mouse button, or the touch pad on the Vive teleports the vrTrackedVolume to a position in front of and below the current camera, found by a ray cast.

This default behavior is useful for debugging and demos, and allows importing any G3D scene (or retroactively substituting VRApp for GApp as a base class for many programs). When an experience has a specific movement mechanic that is tailored to its simulation and content, that experience can replace this default manipulator with its own.
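The teleport target can be sketched as a single ray cast. In this hypothetical C++ version, the scene query is replaced by an intersection with a horizontal ground plane, the ray leaves the camera along its horizontal heading tilted downward by an assumed downSlope parameter, and the hit point is returned for the caller to assign to the tracked volume's position. G3D's actual ray direction and placement rules may differ.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Vec3 { double x, y, z; };

// Stand-in for a scene ray cast: intersect the ray origin + t * dir (t >= 0)
// with the horizontal plane y = groundY. A real engine would query scene geometry.
std::optional<Vec3> castRayAgainstGround(Vec3 origin, Vec3 dir, double groundY) {
    if (dir.y >= 0.0) return std::nullopt;  // ray never descends toward the ground
    const double t = (groundY - origin.y) / dir.y;
    if (t < 0.0) return std::nullopt;       // ground is behind the ray origin
    return Vec3{origin.x + t * dir.x, groundY, origin.z + t * dir.z};
}

// Hypothetical teleport target: follow the camera's horizontal heading, tilted
// downward by downSlope (an assumed tuning parameter), and return the hit point
// that the caller would assign to vrTrackedVolume's position.
std::optional<Vec3> teleportTarget(Vec3 cameraPos, Vec3 cameraForward, double groundY,
                                   double downSlope = 0.5) {
    const double len = std::hypot(cameraForward.x, cameraForward.z);
    assert(len > 0.0 && downSlope > 0.0);
    const Vec3 dir{cameraForward.x / len, -downSlope, cameraForward.z / len};
    return castRayAgainstGround(cameraPos, dir, groundY);
}
```

With the camera 1.6 m above the floor and the default slope of 0.5, the target lands 3.2 m ahead. Returning an empty optional lets the manipulator ignore the button press when nothing is hit.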

I'm debating whether the default manipulator should apply rigid-body physics or ray casts to prevent the vrHead from moving through walls. My current inclination is not to, because the default behavior is typically used for debugging and full control is best in that situation. However, it might be helpful to provide a built-in manipulator with this behavior that developers can easily switch to. I like the Vanishing Realms mechanic of pushing the vrTrackedVolume backwards when the player's real or virtual motion moves the vrHead inside a virtual object, rather than The Climb's disconcerting solution of blacking out the screen.
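A push-back mechanic of this kind can be sketched by testing the head's world-space position (not the tracked volume's) against obstacles and, on penetration, translating vrTrackedVolume so that its child vrHead exits. This hypothetical C++ sketch swaps in a simple rule, pushing out through the nearest vertical face of an axis-aligned box, rather than pushing opposite the motion. The box obstacle, the head radius, and a yaw-free tracked volume are all simplifying assumptions.

```cpp
#include <algorithm>
#include <cassert>

struct Vec3 { double x, y, z; };
struct Box { Vec3 lo, hi; };  // hypothetical axis-aligned obstacle in world space

// Horizontal translation that moves the head out of the obstacle, or zero if it is
// not inside. The head sphere is approximated conservatively by growing the box.
Vec3 pushOutOffset(Vec3 head, const Box& b, double headRadius) {
    assert(headRadius >= 0.0);
    const Vec3 lo{b.lo.x - headRadius, b.lo.y - headRadius, b.lo.z - headRadius};
    const Vec3 hi{b.hi.x + headRadius, b.hi.y + headRadius, b.hi.z + headRadius};
    const bool inside = head.x > lo.x && head.x < hi.x &&
                        head.y > lo.y && head.y < hi.y &&
                        head.z > lo.z && head.z < hi.z;
    if (!inside) return {0, 0, 0};
    // Distance to each vertical face; exit through the shallowest one.
    const double toMinX = head.x - lo.x, toMaxX = hi.x - head.x;
    const double toMinZ = head.z - lo.z, toMaxZ = hi.z - head.z;
    const double best = std::min({toMinX, toMaxX, toMinZ, toMaxZ});
    if (best == toMinX) return {-toMinX, 0, 0};
    if (best == toMaxX) return {toMaxX, 0, 0};
    if (best == toMinZ) return {0, 0, -toMinZ};
    return {0, 0, toMaxZ};
}

// Collision is tested at the head, but the correction is applied to the tracked
// volume; because vrHead is its child, the head moves out with it.
Vec3 resolveHeadPenetration(Vec3 volumeOrigin, Vec3 headInVolume, const Box& obstacle,
                            double headRadius) {
    const Vec3 head{volumeOrigin.x + headInVolume.x, volumeOrigin.y + headInVolume.y,
                    volumeOrigin.z + headInVolume.z};
    const Vec3 d = pushOutOffset(head, obstacle, headRadius);
    return {volumeOrigin.x + d.x, volumeOrigin.y + d.y, volumeOrigin.z + d.z};
}
```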

Rendering

The VRApp::onGraphics method automatically runs the GApp::onGraphics3D code twice every frame, temporarily switching out the active camera for vrEye[0] and then for vrEye[1]. VRApp maintains two HDR framebuffers, two GBuffers, and two post-processing pipelines to seamlessly support this behavior. G3D's shadow maps are always memoized if shadow casters and lights have not moved between frames, so shadow maps are only rendered once and then reused for both eyes automatically.

Because of this design, most G3D::GApp subclasses can be switched to G3D::VRApp subclasses and even custom rendering that wasn't intended for VR will still work. Two great examples are the CPU ray tracer and GPU ray marcher, which use radically different rendering algorithms than the default GPU rasterization deferred shading pipeline.
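The per-eye loop and the shadow-map memoization can be sketched as follows. StereoApp, ShadowCache, and the sceneVersion counter are hypothetical simplifications, not VRApp's real members: a version number that changes whenever shadow casters or lights move stands in for G3D's actual change detection, and a list of camera names stands in for the rendered image.

```cpp
#include <array>
#include <cassert>
#include <string>
#include <vector>

struct Camera { std::string name; };

// Records which camera drew into this buffer, standing in for real pixels.
struct Framebuffer { std::vector<std::string> drawnBy; };

// Re-renders the shadow map only when casters or lights have changed.
struct ShadowCache {
    unsigned sceneVersion = ~0u;  // version the cached map was built for
    int renders = 0;              // how many times the map was actually rendered
    void update(unsigned currentVersion) {
        if (currentVersion != sceneVersion) {
            ++renders;
            sceneVersion = currentVersion;
        }
    }
};

class StereoApp {
public:
    std::array<Camera, 2> eye{{{"vrEye[0]"}, {"vrEye[1]"}}};
    std::array<Framebuffer, 2> hdrFramebuffer;  // one per eye; GBuffers and post-FX would be too
    ShadowCache shadows;
    const Camera* activeCamera = nullptr;

    // Stand-in for the application's existing, monoscopic onGraphics3D code.
    void onGraphics3D(Framebuffer& target, unsigned sceneVersion) {
        assert(activeCamera != nullptr);
        shadows.update(sceneVersion);  // memoized: no work for the second eye
        target.drawnBy.push_back(activeCamera->name);
    }

    // Run the unmodified 3D rendering code once per eye, swapping the active camera.
    void onGraphics(unsigned sceneVersion) {
        const Camera* saved = activeCamera;
        for (int i = 0; i < 2; ++i) {
            activeCamera = &eye[i];
            onGraphics3D(hdrFramebuffer[i], sceneVersion);
        }
        activeCamera = saved;  // restore whatever camera the app had selected
    }
};
```

Since the second eye sees the same sceneVersion as the first, the shadow map is rendered at most once per frame, and not at all when nothing that affects shadows has changed.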

Limited field of view proposed by Fernandes and Feiner

When the vrTrackedVolume is in non-teleportation motion due to controller input, a post-process erases portions of the screen outside of an artificially-narrowed field of view. I adopted this effect because it almost completely eliminates motion sickness for me during camera motion in Google Earth and Climbey, in agreement with scientific results by Fernandes and Feiner.
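A field-of-view restrictor of this kind reduces to a radial mask applied in post-processing, plus a visible radius that eases inward while the tracked volume moves. The sketch below is a hypothetical C++ illustration: the radii, feather width, and easing rate are made-up tuning values, and it measures distance from the center of normalized screen coordinates, whereas a real HMD's per-eye projection center is usually off-center.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical FOV restrictor; all tuning values are illustrative assumptions.
struct FovRestrictor {
    double fullRadius = 1.5;        // larger than the corner distance sqrt(2): nothing masked
    double restrictedRadius = 0.6;  // visible radius while moving, in normalized screen units
    double featherWidth = 0.1;      // width of the soft edge between visible and black
    double ratePerSecond = 4.0;     // how quickly the radius eases toward its target
    double radius = 1.5;            // current visible radius

    // Ease the radius toward the restricted value while moving, back to full otherwise.
    void update(bool moving, double dtSeconds) {
        const double target = moving ? restrictedRadius : fullRadius;
        const double a = std::min(1.0, ratePerSecond * dtSeconds);
        radius += (target - radius) * a;
    }

    // Multiplier for a pixel at normalized screen coordinates (x, y) in [-1, 1]:
    // 1 inside the radius, 0 beyond radius + featherWidth, linear ramp in between.
    double mask(double x, double y) const {
        assert(featherWidth > 0.0);
        const double r = std::hypot(x, y);
        return std::clamp(1.0 - (r - radius) / featherWidth, 0.0, 1.0);
    }
};
```

The post-process would multiply each eye's color by mask(x, y); the feathered edge and gradual easing are design choices meant to keep the restriction from drawing attention to itself.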

When developing a non-VR title, it is common to design effects by letting the frame rate fall below target in prototyping and then optimize it back up when the result is visually satisfactory. For VR, that's not acceptable. The developers will get sick if the program is not always hitting the target frame rate and latency. It is also very hard to judge the quality of visuals in VR when not at full frame rate.

To ensure that the experience hits its performance goals even while in development, G3D::VRApp automatically scales rendering quality. This behavior can of course be tuned and disabled if something in the scaled algorithms is what is being developed. The scaling monitors frame duration over successive periods of ten frames and adjusts the following when frame duration is too long:

- Motion blur and depth of field quality and radius

- Bloom radius

- Ambient occlusion radius and quality

- Rendering resolution

- Stereo (VRApp automatically drops to monocular vision as a last resort to attempt to halve frame duration)
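The scaling loop can be sketched as a controller that averages frame duration over ten-frame windows and, when the average exceeds the budget, lowers the next item in the list above. In this hypothetical C++ sketch each item is treated as a single on/off step and quality is never raised again; VRApp's actual adjustments are finer-grained and tunable, so treat the ordering and step size as assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical knobs, in the order listed above (stereo is the last resort).
enum class Knob { MotionBlurAndDoF, Bloom, AmbientOcclusion, Resolution, Stereo };

class QualityScaler {
public:
    explicit QualityScaler(double targetSeconds, int window = 10)
        : target_(targetSeconds), window_(window) {
        assert(window > 0);
    }

    // Call once per frame with the measured frame duration. At the end of each
    // window, if the mean duration is over budget, reduce the next knob.
    void onFrame(double frameSeconds) {
        sum_ += frameSeconds;
        if (++count_ < window_) return;
        const double mean = sum_ / count_;
        sum_ = 0.0;
        count_ = 0;
        if (mean > target_ && next_ < kOrder.size()) {
            reduced_.push_back(kOrder[next_++]);
        }
    }

    bool stereo() const {
        return std::find(reduced_.begin(), reduced_.end(), Knob::Stereo) == reduced_.end();
    }
    const std::vector<Knob>& reduced() const { return reduced_; }

private:
    static constexpr std::array<Knob, 5> kOrder{{Knob::MotionBlurAndDoF, Knob::Bloom,
                                                 Knob::AmbientOcclusion, Knob::Resolution,
                                                 Knob::Stereo}};
    double target_;
    int window_;
    double sum_ = 0.0;
    int count_ = 0;
    std::size_t next_ = 0;
    std::vector<Knob> reduced_;
};
```

For a 90 Hz HMD the budget is about 11.1 ms per frame, i.e. QualityScaler(1.0 / 90.0). Averaging over a window rather than reacting to single frames avoids stepping quality down on one-off hitches.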

Virtual Virtual Reality

Despite all of the effort of making G3D::VRApp able to automatically adapt non-VR content and applications to VR, we also have to handle the reverse. A G3D::VRApp that is run on a machine without an HMD needs to emulate one to make development easier. Sometimes we don't have enough HMDs for everyone on the team, or we don't want to use an HMD when debugging a non-graphics interaction or simulation issue.

It is possible to configure a "null driver" under SteamVR, but this is complicated and error-prone, and it (unfortunately) does not appear to be an officially supported part of SteamVR. We're currently working on a design for emulating an HMD (and tracked controllers, even when they don't exist), but it is proving surprisingly hard to implement elegantly. The two obvious alternatives are emulating the entire SteamVR API or having two complete paths for every location that calls into it.

Emulating SteamVR is tricky both because that API is constantly evolving and because SteamVR objects expect to be passed to one another's methods and support features not exposed in the public API. Duplicating all control paths is awkward because it spreads the emulation code across the entire VRApp.

An elegant solution might be to wrap SteamVR with a very similar API and then build an emulation subclass. Extra abstraction layers tend to increase maintenance cost and lead to code bloat, but in this case a wrapper appears to be the most attractive solution, unless we can convince the SteamVR team to provide native HMD and controller emulation!
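The wrapper-plus-emulation idea could look like the following C++ sketch. HMDDriver, EmulatedHMD, Pose, and headHeight are hypothetical names, this is not the SteamVR (OpenVR) API, and the SteamVR-backed subclass that would forward each call to the real runtime is omitted. The point is that application code is written once against the interface, so the emulation lives in one class instead of being spread across every call site.

```cpp
#include <cassert>

// Hypothetical engine-side interface covering only what the app needs.
// This is not the SteamVR/OpenVR API.
struct Pose {
    double position[3];  // meters, in tracked-volume space
    double yaw;          // radians about +y (orientation simplified for the sketch)
    bool valid;
};

class HMDDriver {
public:
    virtual ~HMDDriver() = default;
    virtual bool hmdPresent() const = 0;
    virtual Pose headPose() const = 0;
    virtual int controllerCount() const = 0;
    virtual Pose controllerPose(int index) const = 0;
};

// A SteamVR-backed subclass would forward each call to the runtime (omitted).

// Emulated HMD: synthesizes a standing head and two wands so the rest of the
// app runs unchanged on machines without VR hardware.
class EmulatedHMD final : public HMDDriver {
public:
    bool hmdPresent() const override { return true; }
    Pose headPose() const override { return {{0.0, standingHeight_, 0.0}, yaw_, true}; }
    int controllerCount() const override { return 2; }
    Pose controllerPose(int index) const override {
        assert(index >= 0 && index < controllerCount());
        const double side = (index == 0) ? -0.25 : 0.25;  // left or right hand
        return {{side, standingHeight_ - 0.5, -0.3}, yaw_, true};
    }
    // Mouse or keyboard handlers could call this to turn the emulated head.
    void setYaw(double yaw) { yaw_ = yaw; }

private:
    double standingHeight_ = 1.7;
    double yaw_ = 0.0;
};

// Application code is written once against the interface.
double headHeight(const HMDDriver& driver) { return driver.headPose().position[1]; }
```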

Morgan McGuire (@morgan3d) is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. He is the author of the Graphics Codex, an essential reference for computer graphics now available in iOS and Web Editions.
